Acknowledgements

This work was supported by the National Key Research and Development Program of China under Grant 2018AAA0102002 and by the National Natural Science Foundation of China under Grants 61732007, 61702265, and 61932020.

Citation

If you use our NBA dataset or refer to the baseline results, please cite the following publications.

@article{yan2020social,

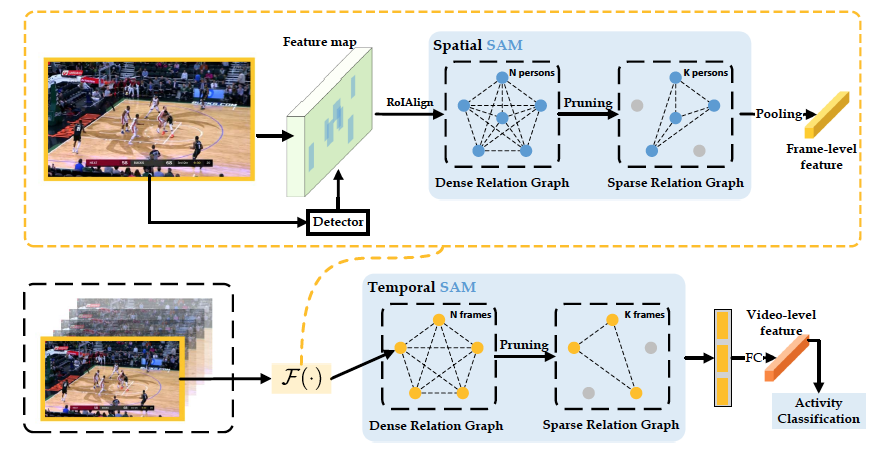

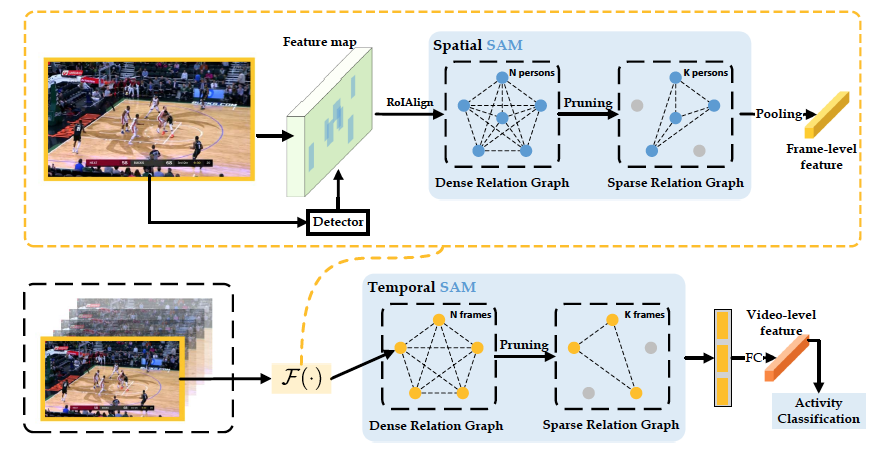

title={Social Adaptive Module for Weakly-supervised Group Activity Recognition},

author={Yan, Rui and Xie, Lingxi and Tang, Jinhui and Shu, Xiangbo and Tian, Qi},

journal={arXiv preprint arXiv:2007.09470},

year={2020}

}

@article{tang2019coherence,

title={Coherence constrained graph LSTM for group activity recognition},

author={Tang, Jinhui and Shu, Xiangbo and Yan, Rui and Zhang, Liyan},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

year={2019}

}

@inproceedings{yan2018participation,

title={Participation-contributed temporal dynamic model for group activity recognition},

author={Yan, Rui and Tang, Jinhui and Shu, Xiangbo and Li, Zechao and Tian, Qi},

booktitle={Proceedings of the 26th ACM International Conference on Multimedia},

pages={1292--1300},

year={2018}

}